Cerebras vs NVIDIA: How Wafer-Scale Engines Are Revolutionizing AI Inference

By Angel Cooper

Introduction: The End of the GPU Monopoly?

For years, NVIDIA has been the undisputed king of AI computing. But a seismic shift is happening. OpenAI just signed a $10 billion deal with Cerebras Systems to deploy 750 megawatts of alternative compute through 2028. The first production model—GPT-5.3-Codex-Spark—is already live, running on Cerebras silicon and delivering over 1,000 tokens per second.

As someone who’s spent years building software and watching infrastructure evolve, I find this partnership fascinating. It’s not just about faster chips—it’s about solving fundamental bottlenecks in how AI systems deliver results to users.

Understanding the Architecture: Wafer-Scale vs. GPU Clusters

The Traditional GPU Approach

When you think of AI computing, you probably imagine rows of NVIDIA GPUs in massive data centers. And you’d be right—this is how most AI inference works today. But there’s a fundamental problem: memory bandwidth bottlenecks.

Here’s what happens inside a traditional GPU cluster:

- Multiple discrete chips: A typical AI server might have 8 NVIDIA H100 GPUs, each sitting on a separate piece of silicon

- Off-die memory (HBM): Each GPU has its own High Bandwidth Memory (HBM) stacked beside the die, so weights and activations still have to travel between memory and the compute cores

- Interconnect overhead: When an AI model spans multiple GPUs, data must shuffle between them via NVLink or PCIe

- Latency accumulation: Each cross-chip transfer adds a small delay, and because tokens are generated one at a time, those delays add up over the length of a response
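
To see how per-transfer costs compound, here is a minimal sketch of a latency model. The function names and every number in it are illustrative assumptions, not measurements of any real NVLink or PCIe system, and it holds per-token compute time constant for simplicity.

```python
def per_token_latency_us(compute_us: float, hops: int, hop_latency_us: float) -> float:
    """Time for one token when each token needs `hops` cross-chip transfers."""
    return compute_us + hops * hop_latency_us


def response_latency_ms(tokens: int, compute_us: float, hops: int,
                        hop_latency_us: float) -> float:
    """Tokens are generated sequentially, so per-token costs simply add up."""
    return tokens * per_token_latency_us(compute_us, hops, hop_latency_us) / 1000.0


# Hypothetical workload: a 500-token response, 1,000 us of compute per token.
single_chip = response_latency_ms(500, 1000.0, hops=0, hop_latency_us=0.0)
# Same workload, but each token crosses chip boundaries 16 times at 20 us each.
multi_chip = response_latency_ms(500, 1000.0, hops=16, hop_latency_us=20.0)

print(f"single chip: {single_chip:.0f} ms")
print(f"multi chip:  {multi_chip:.0f} ms")
```

Under these made-up numbers the transfers add about a third to total response time, even though each individual hop is tiny.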

NVIDIA’s flagship Blackwell B200 packs 208 billion transistors. Impressive? Absolutely. But compared to what Cerebras offers, it’s a fundamentally different approach.

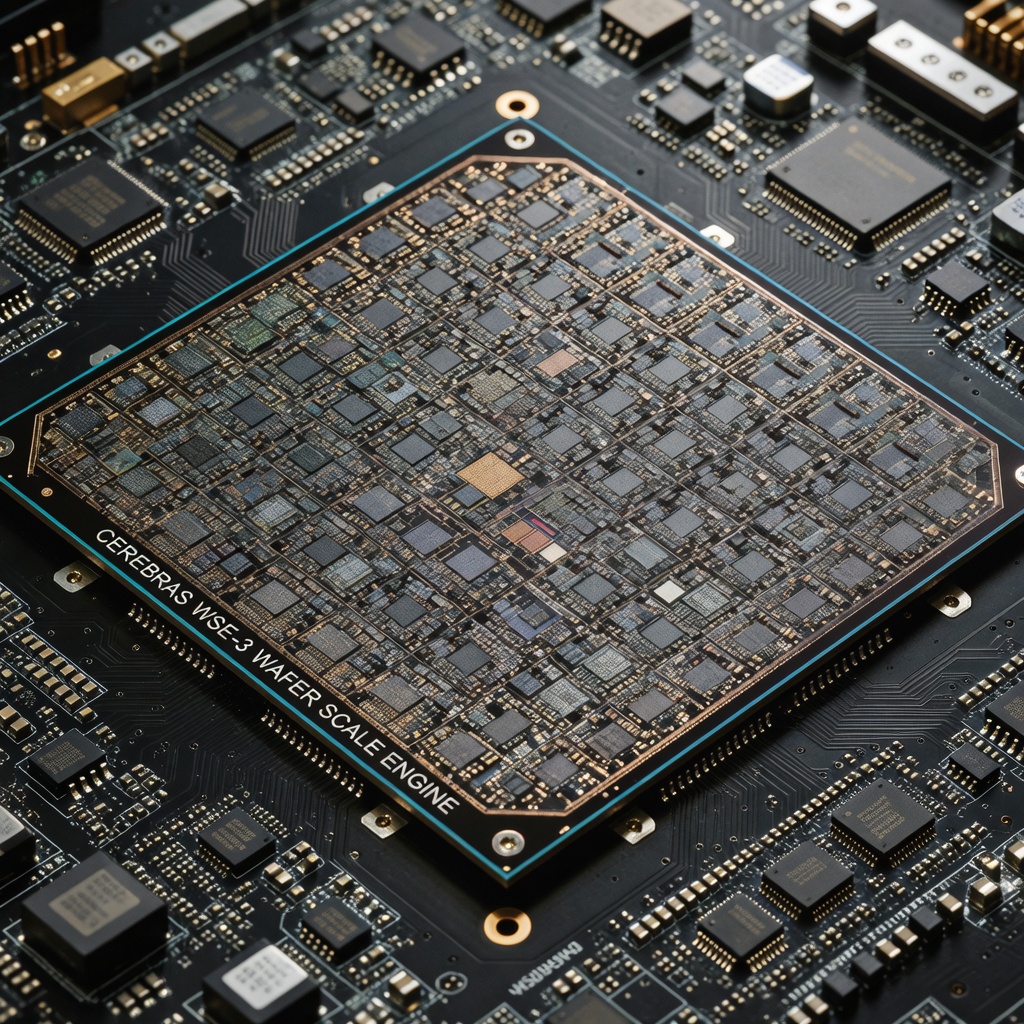

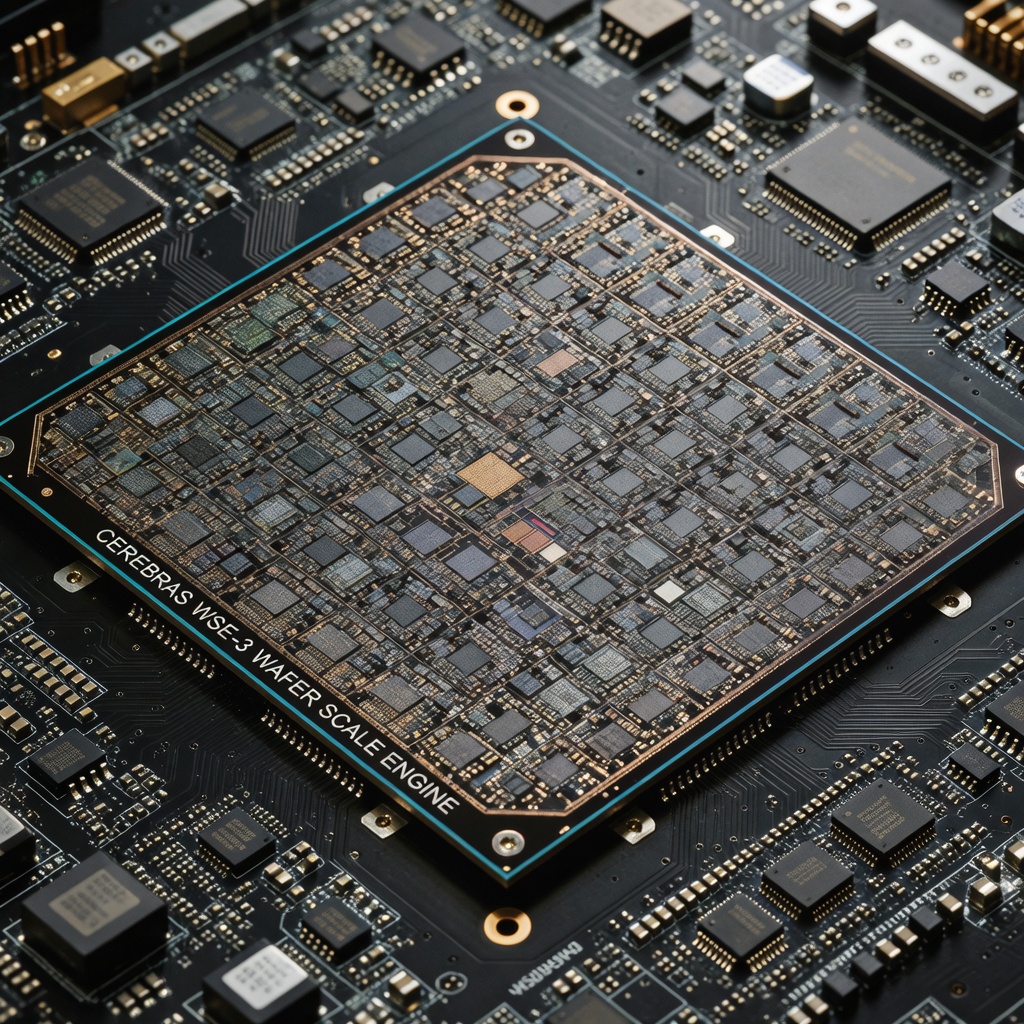

The Cerebras Revolution: One Wafer, One Chip

Cerebras takes the opposite approach. Instead of cutting a silicon wafer into hundreds of separate chips (as NVIDIA does), they keep the entire wafer as ONE massive processor. The WSE-3 (Wafer Scale Engine 3) is literally the size of a dinner plate—and it’s the largest chip ever built.

Let’s look at the numbers:

- 4 trillion transistors (about 19x as many as NVIDIA's B200)

- 900,000 AI cores on a single piece of silicon

- SRAM memory embedded directly on the chip—no external HBM needed

- Massive on-chip memory bandwidth that eliminates data transfer bottlenecks
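
The transistor comparison is easy to check using the two figures quoted in this article. The constant names below are mine, chosen for illustration.

```python
WSE3_TRANSISTORS = 4_000_000_000_000  # 4 trillion, as quoted above
B200_TRANSISTORS = 208_000_000_000    # 208 billion, as quoted above

ratio = WSE3_TRANSISTORS / B200_TRANSISTORS
print(f"WSE-3 has about {ratio:.1f}x the transistors of a B200")
```

Keep in mind this compares a whole wafer to a single GPU package, so the ratio describes scale rather than delivered performance.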

The analogy I like: think of traditional GPU clusters as a city with traffic between neighborhoods. Cerebras eliminated the traffic entirely. All the “housing” (computation) and “warehouses” (memory) exist on one massive plot of land.

The $10 Billion Partnership: What OpenAI Is Buying

In January 2026, OpenAI announced a multi-year agreement with Cerebras valued at over $10 billion. Here’s what they’re getting:

- 750 megawatts of compute capacity coming online in phases through 2028

- Dedicated inference infrastructure optimized for low-latency workloads

- Access to WSE-3 systems for production AI serving

But here’s what makes this deal significant: it’s not about replacing NVIDIA. OpenAI explicitly stated: “GPUs remain foundational across our training and inference pipelines and deliver the most cost-effective tokens.”

This is a portfolio strategy—right chip for the right job.

Why Now? The Inference Era

The timing is crucial. As AI models have improved, inference (actually using the model to generate responses) has become the dominant computational workload. Consider:

- ChatGPT serves millions of users daily—each query requires inference

- Coding assistants like Codex run continuous inference sessions

- AI agents will make thousands of inference calls per task

When AI responds in real time, users do more with it, stay longer, and run higher-value workloads. OpenAI called this the “broadband moment” for AI—the same technology, but speed unlocks entirely new use cases.

Technical Deep Dive: SRAM vs. HBM

One of the key differences between Cerebras and NVIDIA lies in memory architecture:

NVIDIA: High Bandwidth Memory (HBM)

- Memory stacked vertically near the GPU die

- Extremely high bandwidth, but still requires data movement

- Mature ecosystem with years of optimization

- Cost-effective at scale for certain workloads

Cerebras: Embedded SRAM

- Static RAM directly integrated onto the wafer

- No off-chip data movement, so memory access latency is far lower

- Massive bandwidth because everything is on-chip

- More expensive per chip, but faster inference

The trade-off: for tasks where speed matters more than cost per token, Cerebras wins. For bulk processing where latency doesn’t matter, traditional GPUs remain more economical.
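
One rough way to see why memory bandwidth matters so much for inference: when generating a single stream of tokens, the model's weights are read roughly once per token, so bandwidth divided by weight size gives an upper bound on tokens per second. The sketch below uses that common back-of-the-envelope estimate. The model size and both bandwidth figures are placeholder assumptions for illustration, not vendor specifications, and the estimate ignores compute limits, batching, and the KV cache.

```python
def max_decode_tokens_per_s(params_billion: float, bytes_per_param: float,
                            bandwidth_tb_s: float) -> float:
    """Upper bound on single-stream decode speed if every weight is read once per token."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return (bandwidth_tb_s * 1e12) / weight_bytes


# Placeholder values for illustration only.
MODEL_PARAMS_B = 10.0   # hypothetical 10B-parameter model
BYTES_PER_PARAM = 2.0   # 16-bit weights
OFF_CHIP_TB_S = 3.0     # assumed HBM-class bandwidth
ON_CHIP_TB_S = 1000.0   # assumed on-wafer SRAM-class bandwidth

off_chip = max_decode_tokens_per_s(MODEL_PARAMS_B, BYTES_PER_PARAM, OFF_CHIP_TB_S)
on_chip = max_decode_tokens_per_s(MODEL_PARAMS_B, BYTES_PER_PARAM, ON_CHIP_TB_S)

print(f"off-chip bound: {off_chip:,.0f} tokens/s")
print(f"on-chip bound:  {on_chip:,.0f} tokens/s")
```

Real systems land well below these bounds, but the gap between the two shows why keeping weights in on-chip memory targets latency-sensitive workloads in particular.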

Coding in Real-Time: The First Product

The first visible product of this partnership is GPT-5.3-Codex-Spark, launched in February 2026. This isn’t a frontier model—it’s a specialized, lightweight version optimized for speed.

Key specifications:

- 1,000+ tokens per second (up to 15x faster than standard Codex)

- 128,000 token context window

- Available to ChatGPT Pro subscribers as a research preview

- Text-only at launch (vision capabilities coming)
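
To make the speed figure concrete, here is a small calculation of what "up to 15x faster" implies for a single response. The 2,000-token response length is a hypothetical example, and the baseline is derived from the best-case speedup, so the real gap may be smaller.

```python
SPARK_TOKENS_PER_S = 1_000  # lower bound quoted above
SPEEDUP = 15                # "up to 15x", i.e. the best case
RESPONSE_TOKENS = 2_000     # hypothetical response length

# Baseline speed implied by the best-case speedup.
baseline_tokens_per_s = SPARK_TOKENS_PER_S / SPEEDUP


def generation_time_s(tokens: int, tokens_per_s: float) -> float:
    """Seconds needed to stream `tokens` at a steady rate."""
    return tokens / tokens_per_s


spark_s = generation_time_s(RESPONSE_TOKENS, SPARK_TOKENS_PER_S)
baseline_s = generation_time_s(RESPONSE_TOKENS, baseline_tokens_per_s)

print(f"implied baseline: {baseline_tokens_per_s:.0f} tokens/s")
print(f"Spark:    {spark_s:.1f} s")
print(f"baseline: {baseline_s:.1f} s")
```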

Early user feedback describes the experience as “insane,” “near-instant,” and “game-changing.” Viral demos show Spark building complete applications in seconds while standard models are still generating.

But there are trade-offs:

- Lower intelligence: Benchmarks show ~58% on Terminal-Bench 2.0 vs. 77% for full Codex

- Context limitations: The 128k window forces frequent “context compaction”

- Narrow scope: Best for quick hits, not deep reasoning tasks

The lesson: this isn’t about replacing powerful models—it’s about having the right tool for the right task.
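
As a sketch of what "right tool for the right task" could look like in practice, here is a toy router that picks between a fast model and a more capable one. This is a hypothetical heuristic of my own, not how OpenAI routes requests. The profile names are made up, and the speed and score values simply reuse the figures quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    tokens_per_s: float
    benchmark_score: float  # Terminal-Bench 2.0 score as a fraction


# Hypothetical profiles built from the numbers in this article.
FAST = ModelProfile("fast", 1000.0, 0.58)
FULL = ModelProfile("full", 1000.0 / 15, 0.77)


def pick_model(expected_tokens: int, latency_budget_s: float,
               min_score: float) -> ModelProfile:
    """Prefer the most capable model that fits the latency budget."""
    # Ordered most capable first; drop models below the quality floor.
    candidates = [m for m in (FULL, FAST) if m.benchmark_score >= min_score]
    if not candidates:
        raise ValueError("no model meets the minimum score")
    for model in candidates:
        if expected_tokens / model.tokens_per_s <= latency_budget_s:
            return model
    # Nothing fits the budget: fall back to the fastest acceptable model.
    return candidates[-1]


print(pick_model(500, latency_budget_s=2.0, min_score=0.5).name)   # quick edit
print(pick_model(500, latency_budget_s=60.0, min_score=0.5).name)  # patient task
```

The design choice here is that quality is a hard floor and latency is a soft preference, which matches the article's framing of speed for quick hits and the full model for deep reasoning.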

The Hybrid Future: How AI Companies Are Hedging

What OpenAI is doing represents a broader industry trend: diversification. They’re not putting all their chips in one basket.

OpenAI’s Three-Pronged Strategy:

- Cerebras: $10B for ultra-low-latency inference (750 MW through 2028)

- AMD: Tens of billions for MI450 GPUs (6+ gigawatts)

- Broadcom: Custom silicon development partnership

NVIDIA remains the foundation—but specialized players are carving out niches.

Why Diversify?

- Supply constraints: NVIDIA chips are reportedly sold out through 2027

- Risk management: No company wants 100% dependency on one vendor

- Cost vs. speed: Sometimes speed matters more than cost efficiency

- Negotiating power: Having alternatives strengthens bargaining position

Market Implications: The $10B Question

Let’s put this in perspective:

- NVIDIA’s data center revenue: $51 billion in a single quarter

- The entire Cerebras deal: roughly two and a half weeks of NVIDIA's data center business at that run rate

- Cerebras valuation: $23 billion (pre-IPO), with private shares hitting $105 (implying $27B)
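
Taking the $10 billion deal value and the $51 billion quarterly figure at face value, the scale comparison can be checked with a few lines of arithmetic. The 13-week quarter is my assumption.

```python
DEAL_USD_B = 10.0                  # deal value quoted above, in billions
QUARTERLY_DC_REVENUE_USD_B = 51.0  # NVIDIA data center revenue for one quarter
WEEKS_PER_QUARTER = 13             # assumption: a standard 13-week quarter

weekly_revenue_usd_b = QUARTERLY_DC_REVENUE_USD_B / WEEKS_PER_QUARTER
weeks_equivalent = DEAL_USD_B / weekly_revenue_usd_b

print(f"weekly data center revenue: ${weekly_revenue_usd_b:.2f}B")
print(f"deal equals about {weeks_equivalent:.1f} weeks of that revenue")
```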

NVIDIA is NOT being replaced, not even close. But its near-monopoly is starting to fragment.

As one analyst put it: “The ‘GPU-only’ era of AI is ending. When OpenAI diversifies away from NVIDIA, pay attention.”

Looking Ahead: What This Means for AI

The Cerebras partnership signals a fundamental shift in how we think about AI infrastructure:

- Specialization over generalization: Different chips for different tasks

- Latency as a feature: Speed enables entirely new use cases

- Infrastructure is the moat: Who builds the best AI data centers wins

- The inference era: Training was the bottleneck; now inference is

For developers and businesses, this means AI tools will get faster, more responsive, and more specialized. The days of waiting minutes for AI to “think” are ending.

For the industry, it means the $500+ billion AI infrastructure buildout is just beginning—and it’s multi-vendor.

Conclusion

The Cerebras-NVIDIA comparison isn’t about winners and losers. It’s about recognizing that the AI compute market is large enough for specialized solutions to thrive alongside general-purpose GPUs.

NVIDIA remains the king of training and general inference. But for ultra-low-latency workloads—real-time coding, interactive agents, instant responses—wafer-scale engines are proving there’s a better way.

The $10 billion bet by OpenAI isn’t just about faster ChatGPT responses. It’s a statement: the future of AI infrastructure will be heterogeneous, specialized, and fast.

And that future is arriving faster than anyone expected.

Angel Cooper is a technology enthusiast and software engineer passionate about emerging technologies, AI infrastructure, and the future of computing.